With the rapid development of artificial intelligence technologies, social engineering is more dangerous than ever. As CNN reports, in 2024, a multinational design and engineering company called Arup lost $25 million due to its employees falling for a deepfake impersonation scam. It wasn’t malware or a system vulnerability that let the attackers in; it was trust.

Social engineering works because it targets people, not technology. While firewalls and endpoint protection can block most technical attacks, human curiosity and susceptibility to pressure are harder to defend against.

According to the 2025 Verizon Data Breach Investigations Report, around 60% of breaches involve a human element, through techniques such as phishing, stolen credentials, or manipulation.

This article explores the psychology of social engineering: why attackers use it, which human instincts they exploit, and how individuals and organizations can strengthen their defenses against these subtle yet devastating attacks.

Why Do Cyber Attackers Use Social Engineering?

Cyber attackers turn to social engineering because it’s efficient, scalable, and remarkably effective. Compared to technical hacks that require skill, time, and specialized tools, manipulating people often costs almost nothing and brings faster and more profitable results.

Human Weakness

Technology keeps evolving, but humans remain the weakest link in the security chain. Even with multi-factor authentication, advanced firewalls, and constant monitoring, a personalized message or convincing phone call can still open the door to a breach.

Attackers know that trust, fear, and urgency are powerful levers, and that a single distracted click can let them through even very advanced digital protection.

Large-Scale Campaigns

Another reason is scale. Group phishing and large-scale social engineering campaigns can target hundreds or thousands of individuals simultaneously, relying on predictable human behavior.

Most people will at least glance at a message from a “manager,” “vendor,” or “IT department.” It only takes one response for attackers to gain access, so understanding social engineering psychology is essential for protecting your business from online threats.

The Psychology of Social Engineering

Social engineering isn’t about breaking systems; it’s about breaking trust. Attackers understand that human behavior follows predictable psychological patterns, and they exploit these instincts to manipulate decisions.

Which Elements of Human Psychology Do Social Engineering Attacks Try to Appeal To?

Social engineers succeed because they understand what drives human behavior. By exploiting basic psychological instincts, they can influence people to act against their own best interests without realizing it.

- Trust and Authority: People naturally defer to perceived authority, so threat actors often impersonate executives, banks, or government agencies to appear credible. A message that “looks official” can instantly lower skepticism and prompt action.

- Fear and Urgency: Attackers know fear overrides logic. Messages warning of locked accounts, missed deadlines, or financial loss push victims to act quickly without verifying details.

- Curiosity: Humans are wired to seek information. Clickbait headlines or “confidential document” alerts exploit curiosity, leading users to malicious links or downloads.

- Greed and Reward: Promises of easy gains (like gift cards, prizes, or exclusive discounts) trigger excitement and impulsive clicks. Attackers rely on people wanting to believe the opportunity is real.

- Obedience to Hierarchy: In corporate environments, employees often follow orders from perceived superiors without question. Well-fabricated messages from “the CEO” or “finance director” exploit this respect for hierarchy to authorize fraudulent payments or data transfers.

Cognitive Biases and Behavioral Science in Play

Attackers also exploit cognitive biases, which are the mental shortcuts that influence how we perceive and react. These biases shape responses in ways that social engineers can predict and manipulate.

- Reciprocity Principle: If someone offers help or a favor (like “I fixed your account issue”), people feel obligated to respond or return the favor, without realizing it can be a trap.

- Scarcity and FOMO: Messages with phrases like “limited time offer” or “final notice” create urgency and trigger the fear of missing out, reducing critical thinking.

- Confirmation Bias: People tend to trust information that supports their existing beliefs or fears. Attackers use this by sending messages that “feel right,” such as a confirmation of a fake invoice or an alert about a security concern the recipient already worries about.

- Social Proof: Humans rely on others’ behavior to validate decisions, so threat actors often fabricate endorsements, testimonials, or peer approvals to make their requests appear legitimate.

Common Psychological Triggers and Related Attack Tactics

| Psychological Trigger | Behavioral Principle | Common Attack Tactic | Example Scenario |
| --- | --- | --- | --- |
| Trust & Authority | Obedience to perceived power | CEO fraud, business email compromise | “Please wire $25,000 to our vendor immediately – CEO” |
| Fear & Urgency | Emotional pressure | Phishing, fake account alerts | “Your account will be suspended in 24 hours” |
| Curiosity | Information-seeking instinct | Clickbait links, malicious attachments | “Exclusive: confidential HR report inside” |
| Greed & Reward | Reward anticipation | Lottery scams, fake giveaways | “You’ve won a $500 gift card – claim now” |
| Reciprocity | Social exchange | Pretexting, help-desk scams | “I helped you with that issue, can you verify your password?” |
| Scarcity & FOMO | Limited-time bias | Discount scams, urgent calls to action | “Offer ends in one hour – renew now!” |
| Confirmation Bias | Belief reinforcement | Invoice scams, “security update” emails | “Your account shows unusual login activity” |
| Social Proof | Desire for validation | Fake reviews, impersonation of colleagues | “John from IT approved this update, too” |
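
To make the mapping above more concrete, here is a minimal Python sketch that flags which triggers a message appears to lean on. The cue phrases in `TRIGGER_CUES` and the `flag_triggers` helper are hypothetical, loosely drawn from the example scenarios in the table, and simple keyword matching like this would miss most real attacks. Treat it as an awareness-training illustration, not a detection tool.

```python
import re

# Hypothetical cue phrases per trigger, loosely based on the example
# scenarios above. Real messages need far richer signals than keywords.
TRIGGER_CUES = {
    "Trust & Authority": [r"\bceo\b", r"\bwire\b", r"\bfinance director\b"],
    "Fear & Urgency": [r"\bsuspended\b", r"\bimmediately\b", r"\bin \d+ hours?\b"],
    "Curiosity": [r"\bconfidential\b", r"\bexclusive\b"],
    "Greed & Reward": [r"\bgift card\b", r"\byou['’]ve won\b", r"\bclaim now\b"],
    "Scarcity & FOMO": [r"\boffer ends\b", r"\blimited time\b", r"\bfinal notice\b"],
}


def flag_triggers(message: str) -> list[str]:
    """Return the triggers whose cue phrases appear in the message."""
    text = message.lower()
    return [
        trigger
        for trigger, patterns in TRIGGER_CUES.items()
        if any(re.search(pattern, text) for pattern in patterns)
    ]


if __name__ == "__main__":
    sample = "Please wire $25,000 to our vendor immediately – CEO"
    print(flag_triggers(sample))
```

A message that hits several triggers at once, such as authority combined with urgency, is a good candidate for the “slow down and verify” habit rather than an immediate reply.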

Types of Social Engineering Attacks Explained

While the methods vary, they all rely on the same foundation: trust, fear, and curiosity. Below are the most common types of social engineering attacks and how psychology makes them work.

Phishing (Email, SMS, and Group Phishing)

Phishing attacks remain the most prevalent form of social engineering. Threat actors send fraudulent messages that appear to come from trusted sources (like banks, service providers, or even internal departments) to trick recipients into clicking malicious links or sharing credentials.

There are two main approaches:

- Personalized (spear phishing): Targets individuals with tailored messages that reference real names, roles, or recent activities.

- Bulk or group phishing: Targets many people at once, relying on probability—if even a small percentage responds, the attack succeeds.

Phishing exploits trust and urgency. Victims often react emotionally (“Your account will be locked in 24 hours”) rather than rationally.

Real example: In 2024, a phishing campaign mimicking Microsoft 365 login portals targeted numerous organizations globally and allowed attackers to steal at least 5,000 Microsoft credentials from 94 countries.

Impersonation and Business Email Compromise (BEC)

In impersonation scams and business email compromise (BEC) attacks, threat actors pose as executives, partners, or vendors to pressure employees into wiring money or sharing sensitive data.

This tactic plays directly on authority bias—people’s instinct to obey those they perceive as senior or legitimate. Messages often imitate tone, signature, and even writing style to appear authentic.

Real example: In 2017, the Save the Children Federation fell victim to a BEC scam when attackers accessed a legitimate employee’s email account and sent fake invoices for non-existent suppliers. The charity transferred over $1 million, most of which was later recovered through insurance.

Pretexting and Vishing

Pretexting involves creating a believable backstory to obtain confidential information. The attacker might pose as IT support, HR, or a third-party vendor to justify requests for credentials or personal data. Vishing (voice phishing) extends this to phone calls, where tone and confidence enhance credibility.

These attacks manipulate the reciprocity and trust principles: people want to be helpful, especially to someone who sounds professional or familiar.

Real example: TechCrunch covered the June 2022 incident where attackers socially engineered a Twilio employee through voice phishing, which enabled access to customer data. Twilio identified and eradicated this access within 12 hours and notified the affected customers.

Baiting and Curiosity-Driven Attacks

Baiting is based on curiosity and greed and typically involves enticing users with something desirable, like free software, exclusive files, or even physical USB drives labeled “Confidential.” Once the victim opens the file or plugs in the drive, malware executes or credentials are harvested.

Real example: A 2016 study conducted by researchers from Google, the University of Illinois Urbana-Champaign, and the University of Michigan found that people plugged in and opened files on 48% of the USB drives dropped around a university campus, many without hesitation.

SEO Poisoning and Fake Support

Attackers increasingly exploit search engine trust to spread malicious links through SEO poisoning. They push fake websites to the top of search results for common queries like “download invoice template” or “antivirus support.” When users click, they unknowingly install malware or call fake support numbers.

Real example: In 2024, Dark Reading reported that threat actors abused Google Ads to trick users into downloading data-stealing malware instead of popular collaboration tools like Slack or Notion.

Social engineering’s diversity makes it hard to stop without a comprehensive approach. Each tactic exploits a unique psychological weakness, and that’s why human awareness and cybersecurity services (like continuous executive digital protection from VanishID) are vital for keeping your business safe.

More Real-World Examples of Social Engineering Psychology in Action

Here are some more examples of successful attacks based on social engineering psychology:

- In late 2021, the popular stock trading app Robinhood suffered a significant social engineering attack on its customer support infrastructure. Attackers called the company’s support representatives and tricked them into installing remote access software on their computers, which gave the attackers access to sensitive internal customer data. The breach exposed personal information of millions of customers, including email addresses, names, dates of birth, phone numbers, and some account details.

- In July 2020, Twitter suffered a coordinated social engineering attack targeting about 130 high-profile verified accounts, including those of Elon Musk, Barack Obama, and Joe Biden. The attackers manipulated Twitter employees via phone spear phishing to gain access to internal administrative tools, then used the compromised accounts to post a scam promising to double any bitcoin sent. Over $100,000 in bitcoin was fraudulently obtained before the platform removed the scam tweets.

- In September 2023, MGM Resorts was hit by a sophisticated social engineering attack conducted by the Scattered Spider hacking group, in collaboration with the ALPHV ransomware gang. The attackers harvested employee information from LinkedIn, then impersonated one of them to call MGM’s IT help desk and request password resets, exploiting trust and authority bias.

How to Resist Social Engineering Attacks

Resisting manipulation requires awareness, deliberate skepticism, and a culture that prioritizes verification over convenience.

Individual Defense Strategies

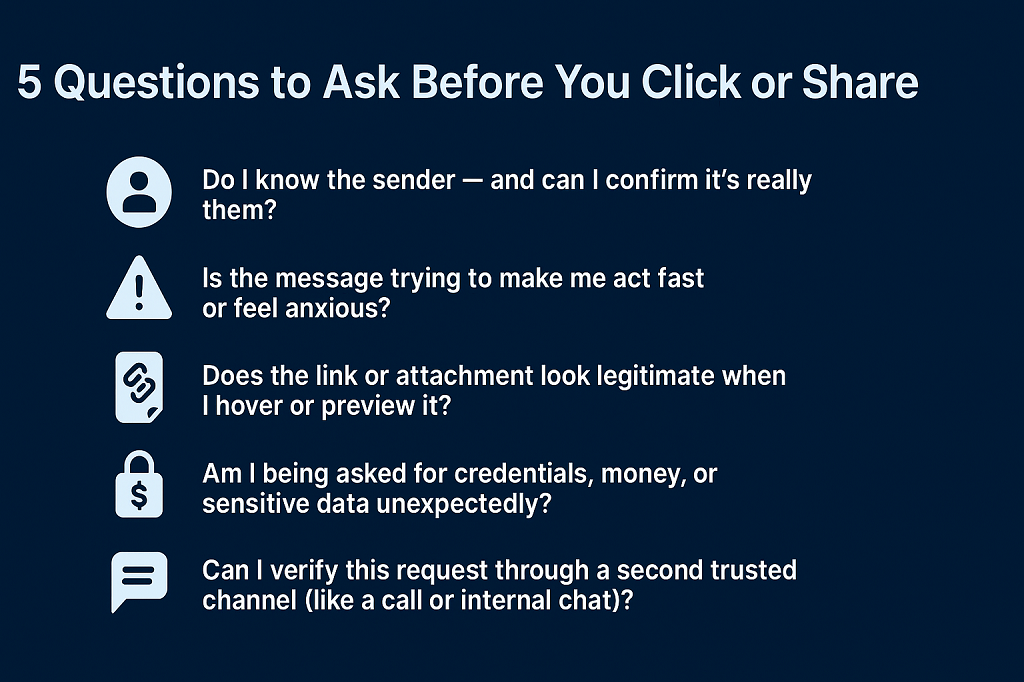

- Slow down and verify: It’s always good to pause and think before reacting. If a message triggers emotion or urgency, take a moment to confirm it through another channel. Remember: first validate, then react.

- Check sender details and links: Look closely at email addresses, domain names, and URLs. Hover over links before clicking. A minor misspelling or an unfamiliar subdomain is often a giveaway.

- Develop healthy skepticism: Assume that unexpected messages asking for action (especially involving money, credentials, or personal data) could be fake. Healthy doubt keeps you one step ahead of manipulation.
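
The “check sender details and links” habit can be partly automated. The sketch below is a minimal Python illustration, not a production filter: `TRUSTED_DOMAINS`, `classify_link`, and the 0.85 similarity threshold are assumptions chosen for the example. It uses only the standard library (`urllib.parse` and `difflib`) to flag hostnames that closely resemble a trusted domain or that embed a trusted name inside an unrelated one.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Hypothetical allow-list; replace with domains your organization really uses.
TRUSTED_DOMAINS = {"example.com", "example-bank.com"}


def classify_link(url: str, threshold: float = 0.85) -> str:
    """Classify a URL as 'trusted', 'lookalike', 'unknown', or 'unparseable'."""
    host = (urlsplit(url).hostname or "").lower()
    if not host:
        return "unparseable"

    # Exact match or a genuine subdomain of a trusted domain.
    for trusted in TRUSTED_DOMAINS:
        if host == trusted or host.endswith("." + trusted):
            return "trusted"

    # Compare both the full host and its last two labels against each
    # trusted domain; a near match suggests a misspelled lookalike.
    candidates = {host, ".".join(host.split(".")[-2:])}
    for trusted in TRUSTED_DOMAINS:
        if trusted in host:
            # Trusted name buried in another domain, e.g. example.com.evil.net
            return "lookalike"
        for candidate in candidates:
            if SequenceMatcher(None, candidate, trusted).ratio() >= threshold:
                return "lookalike"
    return "unknown"


if __name__ == "__main__":
    for link in (
        "https://login.example.com/reset",
        "https://examp1e.com/login",
        "https://example.com.account-verify.net/",
    ):
        print(link, "->", classify_link(link))
```

String similarity is a rough heuristic: it will not catch every trick (for instance, characters from other alphabets that merely look the same), so a result of “unknown” still deserves a second look.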

Organizational Defense Strategies

- Invest in digital footprint protection and identity monitoring: Protecting exposed employee data helps prevent threat actors from gathering intelligence for impersonation or phishing. Services like VanishID offer data broker removal and dark web monitoring, reducing the personal information attackers can exploit.

- Deploy anti-smishing and credential monitoring tools: SMS phishing (smishing) is on the rise. Monitoring for leaked credentials and malicious text campaigns can help identify threats before they reach employees.

- Train and simulate regularly: Conduct ongoing awareness training and phishing simulations to reinforce real-world decision-making under pressure. Make security part of daily culture, not an annual checklist.

- Adopt a zero-trust mindset: “Verify, don’t assume” should be the guiding principle. Every request for access, data, or approval should be authenticated, even when it appears to come from a trusted internal source.
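
One way to turn “verify, don’t assume” into a workflow rule is to hold sensitive requests until they are confirmed over a channel different from the one they arrived on. The following Python sketch illustrates that idea under stated assumptions: the `Request` fields, the `HIGH_RISK_ACTIONS` set, and the $10,000 threshold are all hypothetical policy values, not a recommendation from any standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy values for illustration only.
HIGH_RISK_ACTIONS = {"wire_transfer", "password_reset", "data_export"}
AMOUNT_THRESHOLD_USD = 10_000


@dataclass
class Request:
    action: str
    requester: str
    channel: str                        # where the request arrived, e.g. "email"
    amount_usd: float = 0.0
    verified_via: Optional[str] = None  # independent channel used to confirm it


def needs_out_of_band_check(req: Request) -> bool:
    """High-risk actions and large amounts always require confirmation."""
    return req.action in HIGH_RISK_ACTIONS or req.amount_usd >= AMOUNT_THRESHOLD_USD


def decide(req: Request) -> str:
    """Return 'proceed' or 'hold' for a request."""
    if not needs_out_of_band_check(req):
        return "proceed"
    # Confirmation only counts if it came through a different channel
    # than the original request (e.g. a phone callback for an email).
    if req.verified_via and req.verified_via != req.channel:
        return "proceed"
    return "hold"


if __name__ == "__main__":
    urgent = Request("wire_transfer", "ceo@example.com", "email", 25_000)
    print(decide(urgent))  # held until someone confirms by another channel
    urgent.verified_via = "phone_callback"
    print(decide(urgent))
```

The design choice worth noting is that a reply on the same channel never counts as verification, since an attacker who controls a mailbox or chat account can confirm their own request.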

The Psychology of Resistance

Social engineering succeeds when people act on emotion instead of reason, so building psychological resilience can help counter this reflex.

- Build critical thinking habits: Encourage questioning over compliance. When employees learn to pause, analyze, and confirm, the manipulation cycle breaks.

- Encourage “pause before action”: Create workflows that reward careful verification instead of speed. A short delay can stop a million-dollar mistake.

- Promote a safety culture: Employees should feel comfortable questioning suspicious requests from superiors without fear of blame or delay.

The Future of Social Engineering

Social engineering is evolving faster than most organizations can adapt. What once relied on crude emails or generic scams is now powered by artificial intelligence, enabling more realistic, personalized, and convincing attacks.

AI-powered phishing and deepfakes are already redefining what “believable” looks like. Attackers can use generative AI to convincingly imitate writing styles, create synthetic voices, or produce realistic video impersonation. These tools make deception scalable and nearly indistinguishable from genuine communication.

As a result, it’s essential to focus on proactive human risk intelligence—identifying where and how employees, executives, and brands are being impersonated or mentioned before attacks occur. This includes monitoring for exposed data, leaked credentials, and suspicious domain registrations.

Yet even with advanced technology, psychological awareness remains the foundation of defense. Tools can filter messages, but only a mindful human can question what seems “off.” As AI blurs the line between real and fake, critical thinking, skepticism, and continuous education will be the most reliable protection against manipulation.

Conclusion

Understanding the psychology of social engineering is essential for building a practical defense strategy. Every successful phishing email, impersonation scam, or insider breach begins with the same principle: manipulating human emotion and trust. Recognizing those triggers allows organizations to anticipate, question, and resist them.

True resistance comes from a combination of awareness, data protection, and continuous training. When people know how they’re being targeted and have the confidence to verify before acting, social engineering becomes significantly less effective.

Technology alone can’t eliminate human risk, but proactive monitoring and identity protection can make manipulation far harder. Check out VanishID’s solutions, which help keep personal and location details out of attackers’ hands so that your executives, your teams, and their families can stay safe.

Andrew Clark

Head of Growth Marketing, VanishID

Andrew is a digital marketing strategist specializing in demand generation and customer acquisition for B2B SaaS and cybersecurity companies. He focuses on understanding customer pain points in executive protection and digital footprint management. Prior to VanishID, Andrew led digital marketing at various startups and enterprises, building full-funnel campaigns and launching websites across cybersecurity, cloud simulation, and healthcare sectors. He holds a BA in Communication and Minor in Psychology from the University of Minnesota Duluth.
